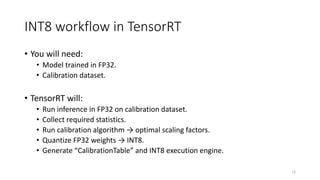

how to use tensorrt int8 to do network calibration | C++ Python. Computer Vision Deep Learning | KeZunLin's Blog

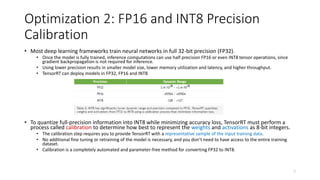

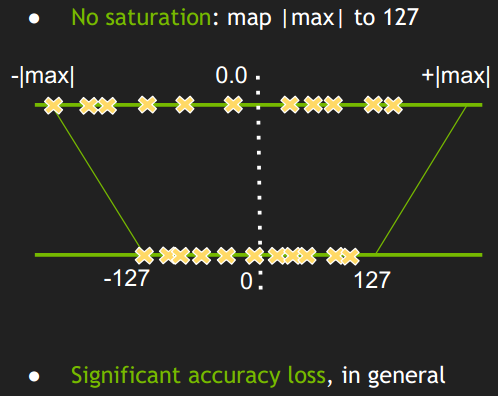

Achieving FP32 Accuracy for INT8 Inference Using Quantization Aware Training with NVIDIA TensorRT | NVIDIA Technical Blog

GitHub - mynotwo/yolov3_tensorRT_int8_calibration: This repository provides a sample to run yolov3 on int8 mode in tensorRT

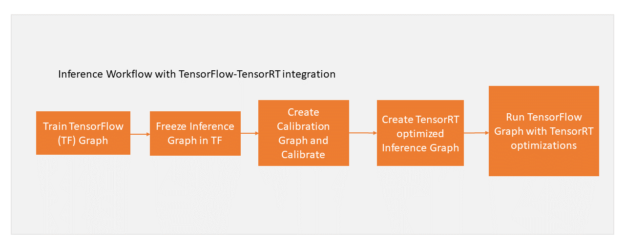

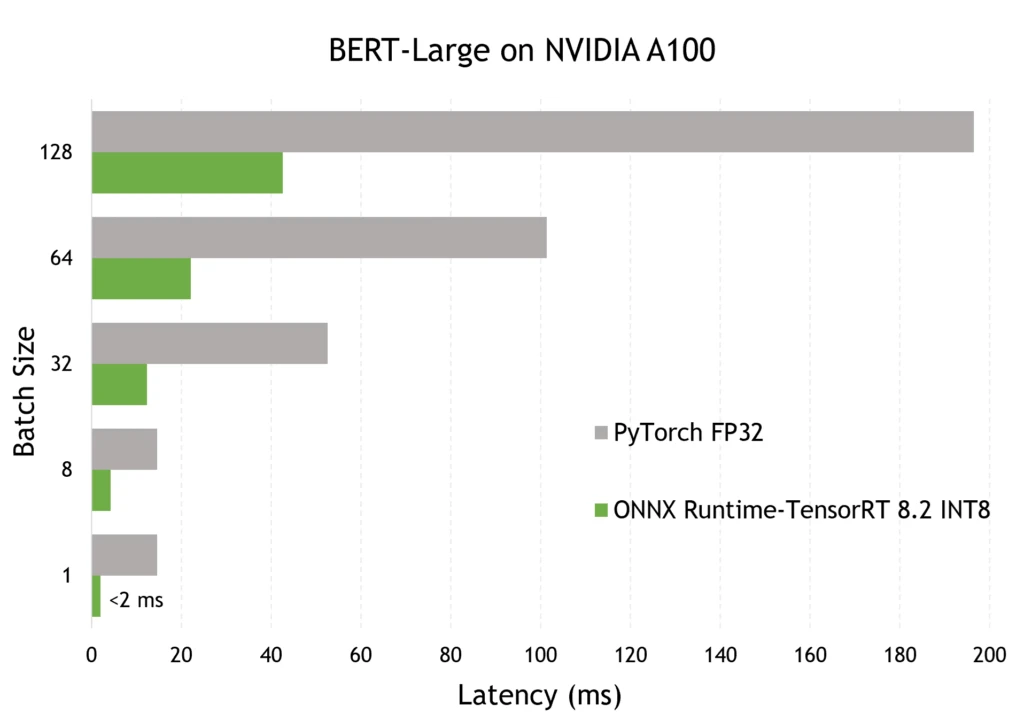

Optimizing and deploying transformer INT8 inference with ONNX Runtime-TensorRT on NVIDIA GPUs - Microsoft Open Source Blog

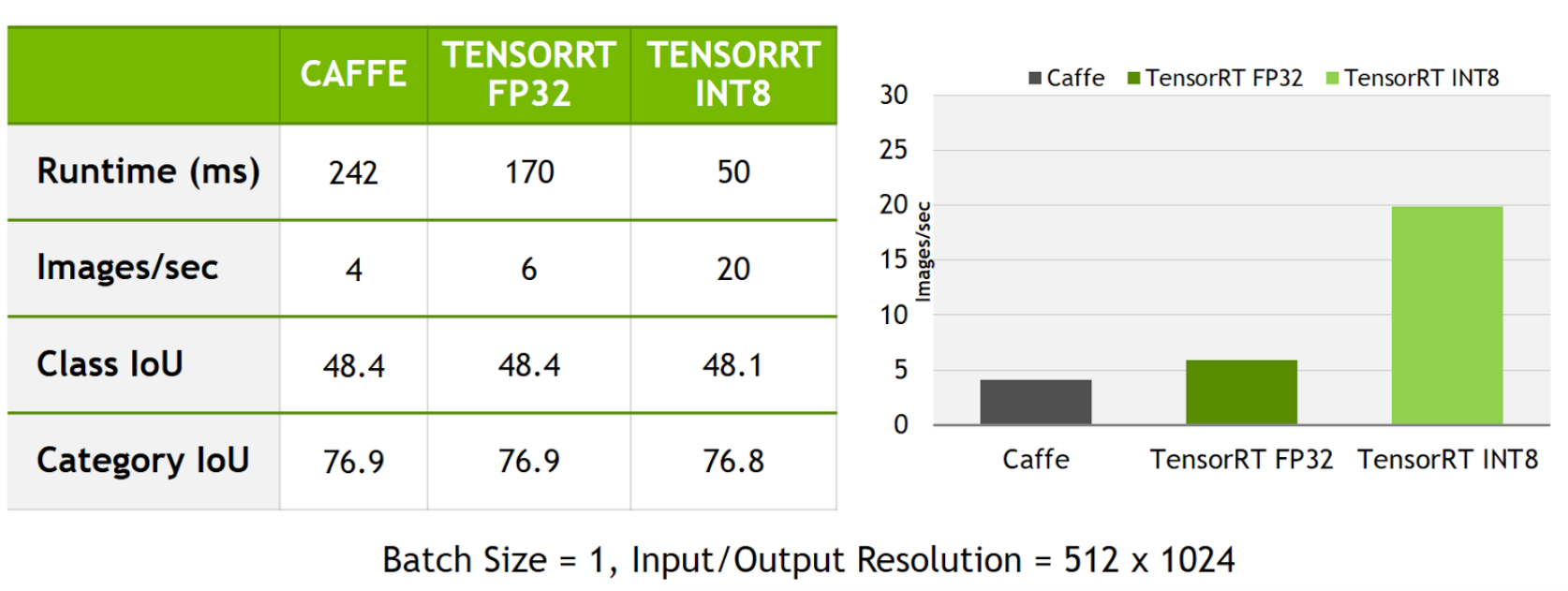

Improving INT8 Accuracy Using Quantization Aware Training and the NVIDIA TAO Toolkit | NVIDIA Technical Blog